This post details problems that you will run into if you use the BizTalk WCF-WebHttp receive adapter in tandem with Azure Service Bus Relays (at least in BizTalk 2013; I haven’t confirmed whether the problem has been fixed since, but I doubt it). If a service consumer appends URL parameters to the Service Bus Relay URL, they will be met with the dreaded AddressFilterMismatch exception. The traditional workaround for AddressFilterMismatch exceptions leads to further problems, which I will describe in this post. I have come up with a generic solution that avoids these problems and makes using the WCF-WebHttp adapter in receive locations in tandem with Service Bus Relays viable.

In this blog post I’ve detailed the problem, explained why it occurs, and explained how the solution works as well. If all you’re interested in is using the solution then you can download the installer here (or here if your machine is 32-bit), or the source code here.

The installer will GAC the relevant dll, and add a Service Behavior called BTWebHTTPSBRelayCompatibility to your machine.config files (if you prefer not to have your machine.config file updated then please don’t use the installer, download the source code and use the behavior as you wish). You will need to use this Service Behavior on your BizTalk WCF-WebHttp receive location to fix the problem detailed in this post. Please ensure you test the installer and the behavior before you attempt to use it in a real life environment, and let me know if you have any feedback.

Preamble about WCF-WebHttp Adapter and Service Bus Relays

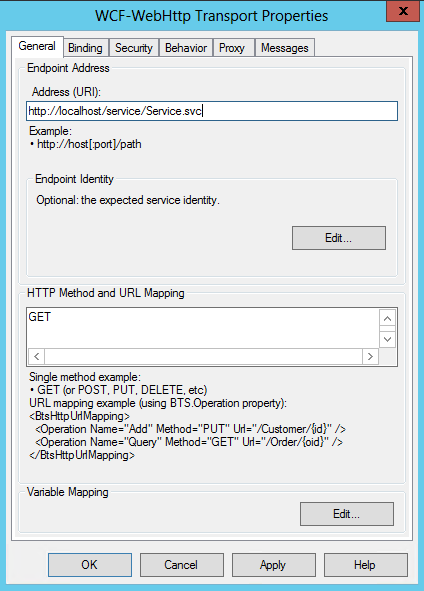

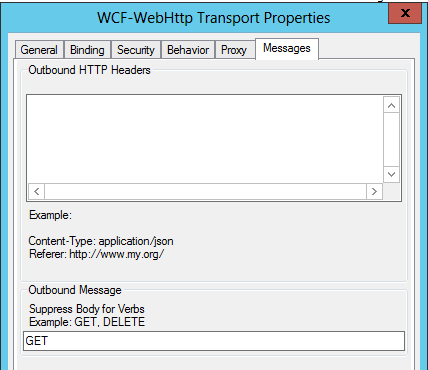

Adding a relay endpoint to your WCF-WebHttp receive location is a piece of cake. When running through the WCF Service Publishing Wizard, you just need to tick the checkbox shown in the screenshot. The final stages of the wizard will then present additional screens that let you choose a Service Bus namespace and specify the ACS credentials that BizTalk uses to connect to the relay (I’ve found a way to get this to work with SAS, but more on that in another blog post).

The wizard doesn’t actually create the relay for you. The relay is created when the service starts up, based on entries that the wizard places in the service’s web.config file (you could even add the web.config sections manually if you missed the relevant wizard options). Note in the screenshot that an additional endpoint using the webHttpRelayBinding binding has been added to the web.config file. The beauty of this is that you can change the relay endpoint URL at any time just by updating the web.config.

Now when the service spins up you’ll find that the relay has been enlisted automatically for you. If you tear the service down, for example by taking down the application pool or stopping IIS, you’ll notice that the relay is no longer listed in the Azure Portal.

The Problem

What I found was that when I sent requests (regardless of the HTTP method) to the base URL of the relay (which in the above example is https://jctest.servicebus.windows.net/JCTestService/Service1.svc) there were no problems. However, the second I added URL parameters to the end of the URL I would get an AddressFilterMismatch error. I also encountered similar problems if I changed my relay address so that it differed from the URL format of my receive location (for example, if I set the relay URL in my web.config to https://jctest.servicebus.windows.net/API/V1).

The obvious workaround and why it isn’t good enough

The aforementioned error message shouldn’t be new to anyone who has used the WCF-WSHttp receive location, as similar errors are encountered in scenarios where the URL that service consumers post to doesn’t match the server name (due to a DNS alias, a load balancer, etc.). The most common solution I’ve seen touted to get around this problem is to use a WCF Service Behavior that changes the dispatcher’s AddressFilter to a MatchAllMessageFilter, which basically means that URL matching will not occur. This is done in the ApplyDispatchBehavior method of the Service Behavior.
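A minimal sketch of what such a behavior typically looks like is shown here. The class names `MatchAllAddressFilterBehavior` and `MatchAllAddressFilterBehaviorElement` are placeholders chosen for illustration and are not taken from any downloadable source; the WCF types and members used (`IServiceBehavior`, `EndpointDispatcher.AddressFilter`, `MatchAllMessageFilter`, `BehaviorExtensionElement`) are standard.

```csharp
using System;
using System.Collections.ObjectModel;
using System.Linq;
using System.ServiceModel;
using System.ServiceModel.Channels;
using System.ServiceModel.Configuration;
using System.ServiceModel.Description;
using System.ServiceModel.Dispatcher;

// Hypothetical name: a service behavior that disables address filtering on every endpoint.
public class MatchAllAddressFilterBehavior : IServiceBehavior
{
    public void AddBindingParameters(ServiceDescription serviceDescription, ServiceHostBase serviceHostBase,
        Collection<ServiceEndpoint> endpoints, BindingParameterCollection bindingParameters)
    {
        // No binding parameters are needed for this behavior.
    }

    public void ApplyDispatchBehavior(ServiceDescription serviceDescription, ServiceHostBase serviceHostBase)
    {
        // Walk every channel dispatcher and every endpoint dispatcher beneath it,
        // replacing the address filter with one that accepts any To address.
        foreach (ChannelDispatcher channelDispatcher in serviceHostBase.ChannelDispatchers.OfType<ChannelDispatcher>())
        {
            foreach (EndpointDispatcher endpointDispatcher in channelDispatcher.Endpoints)
            {
                endpointDispatcher.AddressFilter = new MatchAllMessageFilter();
            }
        }
    }

    public void Validate(ServiceDescription serviceDescription, ServiceHostBase serviceHostBase)
    {
        // Nothing to validate.
    }
}

// Hypothetical name: the configuration element that lets the behavior be registered
// as a behavior extension and selected by name.
public class MatchAllAddressFilterBehaviorElement : BehaviorExtensionElement
{
    public override Type BehaviorType
    {
        get { return typeof(MatchAllAddressFilterBehavior); }
    }

    protected override object CreateBehavior()
    {
        return new MatchAllAddressFilterBehavior();
    }
}
```

Because the loop replaces the filter on every endpoint dispatcher, it also overwrites whatever filter the adapter placed on the default endpoint, which matters for the rest of this post.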

The nice thing about Service Behaviors is that they apply to all endpoints within our service, including our relay endpoint. Adding this behavior to the behaviors tab of our receive location will actually get around the two aforementioned problems that resulted in AddressFilterMismatch errors. However, it introduces a new, very BizTalk-specific problem.

You’ll now find that regardless of your URL/Method/Operation mapping on your BizTalk Receive location, the BTS.Operation context property will never be promoted on your inbound message. This is very limiting, because the BTS.Operation context property is a fantastic way to route your message to relevant orchestrations/send ports without having to have their filters based on the URL of the message. Any URL parameters that you tokenized in the mapping will also not be written to the context.

Why the Problem Occurs

Getting to the bottom of the problem required a fair amount of time with RedGate’s fantastic Reflector tool; I don’t think anyone could figure out the problem without a reflection tool or access to the source code.

I reflected the assemblies that are used within the BizTalk receive location and found that Microsoft implements the URL/Method to context property mapping via a private Endpoint Behavior, aptly named InternalEndpointBehavior. The adapter code adds this behavior to the default endpoint of the receive location programmatically, but it does not add it to the relay endpoint, which is why the relay endpoint behaves differently from the default endpoint.

The InternalEndpointBehavior sets the AddressFilter on the default endpoint to an instance of the WebHttpReceiveLocationEndpointAddressFilter class. This AddressFilter is what does all the magic of promoting the BTS.Operation and other context properties based on the inbound URL/Method. Unfortunately this class is marked as internal as well, so we can’t create an instance of it from a custom behavior.

Since our first solution attempt was to use the MatchAllMessageFilter on our endpoint we would not see this context property promotion occur.

The Solution

The solution I’ve put in place is to create a custom service behavior that performs the below logic in its ApplyDispatchBehavior method. You can download all the source code here.

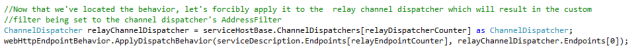

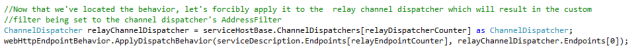

First the behavior needs to figure out which ChannelDispatcher is related to the relay (since we’ll have the relay as well as the default ChannelDispatcher to contend with).

Next the behavior needs to loop through all endpoints, saving the base endpoint addresses for the relay endpoint as well as the default endpoint to local variables (the reason for this will be apparent soon). It also identifies the default endpoint and copies a reference to the contained InternalEndpointBehavior (which as I’ve mentioned applies the WebHttpReceiveLocationEndpointAddressFilter AddressFilter to the endpoint).
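As a rough illustration of this step, the sketch below walks the service description’s endpoints and collects the two base addresses plus the internal behavior. How each endpoint is recognised here is an assumption on my part: the relay endpoint is matched by its binding’s type name (`WebHttpRelayBinding`), and the internal behavior is matched by type name because the type itself is not public. The `EndpointLocator` class and its members are hypothetical names.

```csharp
using System;
using System.Linq;
using System.ServiceModel.Description;

// Hypothetical helper: locates the relay endpoint, the default endpoint and the
// adapter's internal endpoint behavior without referencing any internal types.
public static class EndpointLocator
{
    // Assumption: the relay endpoint is the one whose binding type is named WebHttpRelayBinding.
    public static bool IsRelayEndpoint(ServiceEndpoint endpoint)
    {
        return endpoint.Binding != null
            && endpoint.Binding.GetType().Name == "WebHttpRelayBinding";
    }

    // Assumption: the internal behavior can be recognised by its type name.
    public static IEndpointBehavior FindInternalBehavior(ServiceEndpoint endpoint)
    {
        return endpoint.Behaviors
            .FirstOrDefault(b => b.GetType().Name == "InternalEndpointBehavior");
    }

    public static void Locate(ServiceDescription description,
        out Uri defaultBaseAddress, out Uri relayBaseAddress, out IEndpointBehavior internalBehavior)
    {
        defaultBaseAddress = null;
        relayBaseAddress = null;
        internalBehavior = null;

        foreach (ServiceEndpoint endpoint in description.Endpoints)
        {
            if (IsRelayEndpoint(endpoint))
            {
                relayBaseAddress = endpoint.Address.Uri;
                continue;
            }

            // Treat the endpoint carrying the internal behavior as the default endpoint.
            IEndpointBehavior found = FindInternalBehavior(endpoint);
            if (found != null)
            {
                defaultBaseAddress = endpoint.Address.Uri;
                internalBehavior = found;
            }
        }
    }
}
```

Matching on type names is brittle, so a real implementation would want to fail loudly if either endpoint or the behavior is not found.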

Next we’re going to apply the InternalEndpointBehavior to the relay endpoint which will apply the WebHttpReceiveLocationEndpointAddressFilter to the relay endpoint when it is executed.

This actually isn’t the end. This solution is good enough if the URL of the Service Bus relay mirrors the on-premises URL (except for the host name, of course). However, the second we want our relay URL to differ in structure (perhaps because we want to remove the .svc extension, or provide a friendlier URL format) we will start to get an AddressFilterMismatch exception. The reason is that the URL format the filter is searching for is based on the default endpoint’s format, and because the filter is an internal class we have no ability to override the expected URL format.

So if your relay endpoint URL is https://jctest.servicebus.windows.net/test/service1.svc and the default endpoint URL is https://servername.onpremises.com/test/service1.svc then you won’t have a problem. But if the relay endpoint URL was https://jctest.servicebus.windows.net/API/V1 then you’d get the dreaded AddressFilterMismatch exception. For the default non-relay endpoint, the typical solution to this would be to use IIS’ URL Rewrite feature as described here, but I have found that this didn’t work for me with the Relay Endpoint.

So to get around this, we need to replace the Service Bus URL with the default endpoint URL in the To WCF Addressing header before the message gets to the AddressFilter. I haven’t found an appropriate component that can change the To SOAP header value before the AddressFilter stage, so the solution I’ve implemented is to delay the execution of the AddressFilter so that it runs inside a custom Message Inspector. The inspector first overrides the To WCF Addressing header value so that it matches the AddressFilter’s accepted format, then executes the AddressFilter, and finally changes the To WCF Addressing header back to its original value.

I’ll show you how I’ve achieved the aforementioned solution. We now have the WebHttpReceiveLocationEndpointAddressFilter filter applied to our relay endpoint but this won’t work for us because we can’t run the Message Inspector prior to the filter. So what we’ll do instead is copy a reference to the WebHttpReceiveLocationEndpointAddressFilter filter to a local variable, and change the filter on our relay endpoint to be a MatchAllMessageFilter, which will let pretty much any URL through (don’t worry, we’ll run our WebHttpReceiveLocationEndpointAddressFilter later).

The final step in our behavior is to now instantiate our custom Message Inspector, passing in the base address of the default endpoint, the base address of the relay endpoint and the reference to the WebHttpReceiveLocationEndpointAddressFilter filter object. We then add this Message Inspector to our relay endpoint dispatcher.
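The filter swap and inspector registration described in the last two paragraphs can be sketched roughly as follows. `RelayFilterDeferral` and `Defer` are hypothetical names, and the inspector is supplied through a factory delegate purely to keep this sketch self-contained; the `EndpointDispatcher` and `DispatchRuntime` members used are standard WCF.

```csharp
using System;
using System.ServiceModel.Dispatcher;

// Hypothetical helper: moves address filtering out of the dispatcher and into a message inspector.
public static class RelayFilterDeferral
{
    public static MessageFilter Defer(
        EndpointDispatcher relayEndpointDispatcher,
        Func<MessageFilter, IDispatchMessageInspector> createInspector)
    {
        // Keep a reference to the filter currently applied to the relay endpoint.
        MessageFilter originalFilter = relayEndpointDispatcher.AddressFilter;

        // Let any To address through at the dispatcher stage.
        relayEndpointDispatcher.AddressFilter = new MatchAllMessageFilter();

        // Register an inspector that will run the original filter itself, later in the pipeline.
        relayEndpointDispatcher.DispatchRuntime.MessageInspectors.Add(createInspector(originalFilter));

        return originalFilter;
    }
}
```

The ordering is what makes this work: WCF evaluates the address filter to select an endpoint before any of that endpoint’s message inspectors run, so the original filter has to be invoked from inside the inspector.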

The custom Message Inspector is very simple. All it needs to do is override the To WCF Addressing Header from the Relay Endpoint URL format to the default URL format, execute the AddressFilter, and then set the To WCF Addressing Header back to the Relay Endpoint URL format.
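A minimal sketch of an inspector along these lines follows. The class name `RelayAddressRewriteInspector` is hypothetical, base addresses are compared as plain string prefixes (so trailing slashes are assumed to be consistent between the two), and throwing `EndpointNotFoundException` when the filter rejects the message is my assumption about how a mismatch might be surfaced.

```csharp
using System;
using System.ServiceModel;
using System.ServiceModel.Channels;
using System.ServiceModel.Dispatcher;

// Hypothetical name: rewrites the To header, runs the deferred address filter, then restores the header.
public sealed class RelayAddressRewriteInspector : IDispatchMessageInspector
{
    private readonly string defaultBaseAddress;
    private readonly string relayBaseAddress;
    private readonly MessageFilter addressFilter;

    public RelayAddressRewriteInspector(Uri defaultBaseAddress, Uri relayBaseAddress, MessageFilter addressFilter)
    {
        this.defaultBaseAddress = defaultBaseAddress.AbsoluteUri;
        this.relayBaseAddress = relayBaseAddress.AbsoluteUri;
        this.addressFilter = addressFilter;
    }

    public object AfterReceiveRequest(ref Message request, IClientChannel channel, InstanceContext instanceContext)
    {
        Uri originalTo = request.Headers.To;
        if (originalTo == null)
        {
            return null; // Nothing to rewrite.
        }

        string original = originalTo.AbsoluteUri;
        if (original.StartsWith(relayBaseAddress, StringComparison.OrdinalIgnoreCase))
        {
            // Swap the relay base address for the default endpoint's base address,
            // keeping whatever path and query string the consumer appended.
            request.Headers.To = new Uri(defaultBaseAddress + original.Substring(relayBaseAddress.Length));
        }

        try
        {
            // Run the deferred filter against the rewritten address.
            if (!addressFilter.Match(request))
            {
                // Assumption: reject the request when the filter does not match.
                throw new EndpointNotFoundException("No receive location operation matches " + original);
            }
        }
        finally
        {
            // Always restore the address the consumer actually called.
            request.Headers.To = originalTo;
        }

        return null;
    }

    public void BeforeSendReply(ref Message reply, object correlationState)
    {
        // No work is needed on the reply.
    }
}
```

Restoring the header in a `finally` block means the rest of the pipeline sees the original relay address whether or not the filter matched.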

The beauty of this solution is that no change is introduced to the default endpoint at all, so you are only changing the behavior of the relay endpoint so that it matches the behavior of the default endpoint. Also, the code in the Service Behavior class only executes when your service is first spun up. From then on, the only code that executes as each request is received is the custom message inspector, which is pretty lightweight and does very little additional work that isn’t already done on the default endpoint anyway. You can now set your Service Bus Relay endpoint URL to be whatever you want, and messages will get successfully relayed to your receive location with BTS.Operation and the context properties based on tokenized URL parameters promoted/written to context as expected.

Of course, what would be even better is if this behavior weren’t necessary at all and the BizTalk WCF-WebHttp receive location automatically set the appropriate filter on the relay endpoint for you… maybe Microsoft will address this in BizTalk Server vNext? 🙂 Until then, hopefully this will do the trick for you. If you have any better solutions then please do share them in the comments section.