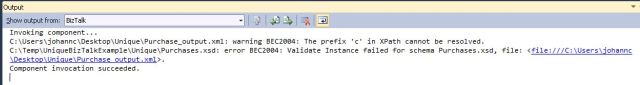

Back in June I wrote a blog post in which I explored how the BizTalk XML Validator pipeline component could be used to prevent duplicate values in repeating records, with the duplicate check scoped to a single element/attribute value or to a combination of them (do have a read of that post for an overview of how this can be done in schemas with an elementFormDefault of unqualified, or with no namespaces). However, at the time I hit a major problem: I could not figure out the syntax to get this working in schemas with an elementFormDefault or attributeFormDefault of qualified. Executing the XML Validator pipeline component consistently failed with the error “The prefix ‘x’ in XPath cannot be resolved” (x being the namespace prefix I was trying to use in the unique constraint), even though the BizTalk project containing the schemas built successfully, and I was unable to work around the problem at the time.

While I was looking at a workaround on a solution I was working on, in which I was going to switch the elementFormDefault on the contained schemas from qualified back to unqualified, my colleague Shikhar and I worked out how to solve the namespace prefix problem. Put very simply, it looks like unique constraints in a schema will not respect namespace prefixes declared at the schema level; instead, the namespace prefixes must be declared at the constraint level.

For the purpose of this exercise I’ve created a new type schema containing a complexType definition called ItemMetadata, which has an attribute called DateOfAvailability and an element called Price. The elementFormDefault and attributeFormDefault attributes are both set to qualified on this schema. I’ve then referenced it via an import statement from the Purchases schema I used in my previous example, and added ItemMetadata as a child node under the repeating Purchase node, so that the schema structure now looks as shown below.
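A minimal sketch of what such a type schema could look like. It uses the http://BizTalk_Server_Project6.Type namespace that the post gives for these nodes; the xs:string types are an assumption (the actual types are not stated, although values such as 10/11/2013 and $13.00 quoted later in the post suggest string-like types). Because both form defaults are qualified, Price and DateOfAvailability belong to that namespace wherever the type is used.

```xml
<?xml version="1.0" encoding="utf-8"?>
<!-- Hypothetical sketch of the type schema described above; the xs:string
     types are assumptions, not taken from the author's solution. -->
<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema"
           xmlns="http://BizTalk_Server_Project6.Type"
           targetNamespace="http://BizTalk_Server_Project6.Type"
           elementFormDefault="qualified"
           attributeFormDefault="qualified">
  <!-- Both form defaults are qualified, so the Price element and the
       DateOfAvailability attribute are in the target namespace. -->
  <xs:complexType name="ItemMetadata">
    <xs:sequence>
      <xs:element name="Price" type="xs:string" />
    </xs:sequence>
    <xs:attribute name="DateOfAvailability" type="xs:string" />
  </xs:complexType>
</xs:schema>
```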

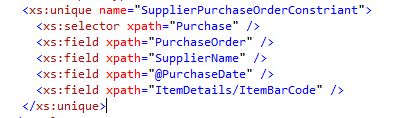

The unique constraint that I had working in the previous blog post specified that if more than one Purchase node contains the same combination of values for the PurchaseOrder, SupplierName, ItemBarCode, ItemDescription and SpecialDeals elements and the PurchaseDate attribute, the XML Validator should throw an exception. None of these elements or attributes belonged to a namespace, since the elementFormDefault and attributeFormDefault on the schema were set to unqualified. I now want to add the DateOfAvailability attribute and the Price element, which both belong to the namespace http://BizTalk_Server_Project6.Type, to the constraint. The namespace must be associated with a prefix, and as we have already seen, this can’t be done at the schema level, so the prefix is instead declared at the constraint level and can then be used in the individual field XPaths.
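A minimal sketch of how the Purchases schema and its constraint could be laid out. The node names, the http://BizTalk_Server_Project6.Type namespace and the constraint name SupplierPurchaseOrderConstriant (spelled as in the error message quoted in this post) come from the post, and the http://uniquepurchases target namespace is inferred from that same error message. The root element name, element order, xs:string types, the ItemMetadataTypes.xsd schemaLocation and the `type` prefix are all assumptions for illustration. The part that matters is the `xmlns:type` declaration on the `xs:unique` element itself.

```xml
<?xml version="1.0" encoding="utf-8"?>
<!-- Hypothetical sketch: root name, element order, types and schemaLocation
     are assumptions; only the node names come from the post. -->
<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema"
           xmlns="http://uniquepurchases"
           xmlns:ns0="http://BizTalk_Server_Project6.Type"
           targetNamespace="http://uniquepurchases"
           elementFormDefault="unqualified"
           attributeFormDefault="unqualified">
  <!-- Import the qualified type schema (file name assumed). -->
  <xs:import namespace="http://BizTalk_Server_Project6.Type"
             schemaLocation="ItemMetadataTypes.xsd" />
  <xs:element name="Purchases">
    <xs:complexType>
      <xs:sequence>
        <xs:element name="Purchase" maxOccurs="unbounded">
          <xs:complexType>
            <xs:sequence>
              <xs:element name="PurchaseOrder" type="xs:string" />
              <xs:element name="SupplierName" type="xs:string" />
              <xs:element name="ItemBarCode" type="xs:string" />
              <xs:element name="ItemDescription" type="xs:string" />
              <xs:element name="SpecialDeals" type="xs:string" />
              <!-- Local element in this unqualified schema, so ItemMetadata itself
                   has no namespace; its Price child and DateOfAvailability
                   attribute are qualified by the type schema. -->
              <xs:element name="ItemMetadata" type="ns0:ItemMetadata" />
            </xs:sequence>
            <xs:attribute name="PurchaseDate" type="xs:string" />
          </xs:complexType>
        </xs:element>
      </xs:sequence>
    </xs:complexType>
    <!-- The prefix used in the field XPaths is declared on xs:unique itself,
         rather than relying on the ns0 declaration on xs:schema. -->
    <xs:unique name="SupplierPurchaseOrderConstriant"
               xmlns:type="http://BizTalk_Server_Project6.Type">
      <xs:selector xpath="Purchase" />
      <xs:field xpath="PurchaseOrder" />
      <xs:field xpath="SupplierName" />
      <xs:field xpath="@PurchaseDate" />
      <xs:field xpath="ItemBarCode" />
      <xs:field xpath="ItemDescription" />
      <xs:field xpath="SpecialDeals" />
      <xs:field xpath="ItemMetadata/@type:DateOfAvailability" />
      <xs:field xpath="ItemMetadata/type:Price" />
    </xs:unique>
  </xs:element>
</xs:schema>
```

The field order here follows the order of the values in the duplicate key sequence reported in the error, which is how the validator lists the key fields.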

With the namespace prefix declared on the constraint, my test XML message, which contains more than one Purchase node with identical values for all of these fields, now generates the error “There is a duplicate key sequence ‘123213 Al Pacino PurchaseDate_0 42129841 Anti-dandruff shampoo 10% off 10/11/2013 $13.00’ for the http://uniquepurchases:SupplierPurchaseOrderConstriant key or unique identity constraint.”, as expected. If you want to use qualified elements or attributes in multiple unique constraints in the same schema, you need to declare the namespace prefix on each constraint. You can reuse the same prefix value on each one, since each declaration is scoped to its own constraint and so there won’t be a clash.
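A hypothetical test message that should trigger this error, reconstructed from the values in the duplicate key sequence quoted above. It assumes Purchases is the root element in the http://uniquepurchases namespace, that Purchase, its child elements and ItemMetadata are unqualified, and that only DateOfAvailability and Price are in the http://BizTalk_Server_Project6.Type namespace. The prefixes are arbitrary, and this is not the author's actual test file.

```xml
<?xml version="1.0" encoding="utf-8"?>
<!-- Hypothetical test message; structure and prefixes are assumptions. -->
<ns1:Purchases xmlns:ns1="http://uniquepurchases"
               xmlns:type="http://BizTalk_Server_Project6.Type">
  <Purchase PurchaseDate="PurchaseDate_0">
    <PurchaseOrder>123213</PurchaseOrder>
    <SupplierName>Al Pacino</SupplierName>
    <ItemBarCode>42129841</ItemBarCode>
    <ItemDescription>Anti-dandruff shampoo</ItemDescription>
    <SpecialDeals>10% off</SpecialDeals>
    <!-- ItemMetadata is unqualified; its attribute and child are qualified. -->
    <ItemMetadata type:DateOfAvailability="10/11/2013">
      <type:Price>$13.00</type:Price>
    </ItemMetadata>
  </Purchase>
  <!-- Same values in every constrained field, so validation should report
       a duplicate key sequence for the unique constraint. -->
  <Purchase PurchaseDate="PurchaseDate_0">
    <PurchaseOrder>123213</PurchaseOrder>
    <SupplierName>Al Pacino</SupplierName>
    <ItemBarCode>42129841</ItemBarCode>
    <ItemDescription>Anti-dandruff shampoo</ItemDescription>
    <SpecialDeals>10% off</SpecialDeals>
    <ItemMetadata type:DateOfAvailability="10/11/2013">
      <type:Price>$13.00</type:Price>
    </ItemMetadata>
  </Purchase>
</ns1:Purchases>
```

Changing any one constrained value in the second node, for example its Price, should make the two key sequences distinct so that the message passes the unique check.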

I’ve uploaded the source code (as a Visual Studio 2012/BizTalk 2013 solution) to my Google Drive account if you are interested in giving it a try.

In conclusion, duplicate value checks in a BizTalk solution are very well served by schema validation using unique constraints (even though the schema designer does not support creating unique constraints, so they have to be added manually). Alternative methods of enforcing such validation would most likely be much harder to implement and support, and nowhere near as efficient.