On one of my current projects I had quite a few requirements that called for some smart use of BizTalk orchestration capabilities. After some hard work I managed to stitch together an orchestration that functioned exactly as I wanted and performed fantastically given its level of complexity. However, there was one problem… My orchestration was so humongous that it epitomized the definition of super-monolithic.

I decided that this was a problem just waiting to happen, as the next developer who came along to modify the solution would look at this orchestration and rethink their career focus altogether. I decided to break the orchestration up into smaller components that weave together to perform the same functionality as the monolith, and to make a few performance tweaks while I was at it.

A bit of background on my project requirements first… This project requires me to expose one-way WCF services (keep in mind that BizTalk one-way WCF services still send back a void response and implement a request-response interface, but they relate to a one-way receive location), with authentication and authorization happening in the WCF stack, schema resolution with the use of an XML disassembler pipeline component, many business rules being executed by the BRE Pipeline Framework, as well as schema validation in the receive pipeline. If the message passes all the validation it is published to the BizTalk message box, at which stage a response is sent back to the WCF client and the message is routed to the monolith of an orchestration for further processing. The solution demanded a high level of throughput, with both the ingestion of messages by the WCF service and the orchestrations processing 100 messages per second.

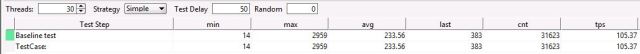

Before breaking things up I decided to run a baseline performance test using SOAPUI, passing a static WCF request message to one of the services over 30 threads with a 50 ms delay between service calls, over a period of 5 minutes. I found that the CPU on my BizTalk server was running hot at 100% throughout the test with fairly light memory usage, while my SQL Server wasn’t running too hot. I also determined that each orchestration instance was creating about 2-3 persistence points on average and that the average time to complete an orchestration was 570 ms. The first orchestration instance started up within the same second my load test was started, and the last orchestration instance to complete did so in the same second my load test ended.

My SOAPUI load test results are as below. I found that my orchestration completion rate was relatively similar to my WCF ingestion rate (which implies to me that more threads are being used by orchestrations than by ingestion, given that orchestrations take longer to complete than WCF service calls), and I also found that a lot of the server’s CPU was dedicated to my processing host. I was pretty confident that this was the maximum sustainable load for my BizTalk application given the current state of the application source code, application configuration and environment configuration.

I then got to work breaking my orchestration up into smaller pieces, and found that it could logically be broken down into 6 different orchestrations. One of these contained my exception handling logic and was reused about 10 times throughout the other 5 orchestrations, which is a big plus of breaking up the orchestrations. I decided to use the call orchestration shape rather than direct binding through the message box or the start orchestration shape, as performance was paramount in this solution and I wanted to avoid message box hops and persistence points in my orchestration. My orchestration is also quite procedural in nature and I wanted my parent orchestration to be blocked while waiting for child orchestrations to complete (with the call orchestration shape the child orchestration is executed on the same thread as the parent orchestration and the parent is blocked till the child completes). I got to work creating orchestration parameters in my child orchestrations, with each parameter marked with a direction of In, Out or Ref as my orchestration logic required.

Re-running my load test left me at a loss: I saw a drastic drop in performance, even though I had made what I thought would be performance tweaks while breaking the orchestration up. My average orchestration completion time was now 797 ms. CPU on my BizTalk server was still running hot at 100%, but it looked like more CPU was being dedicated to the orchestration host this time around. Nothing else (memory usage, persistence points, resource usage on SQL Server etc…) seemed different from my previous run.

It then struck me that passing the parameters as In parameters (the majority of my parameters had a direction of In) must be causing a fair bit of overhead within the .Net CLR. Typically in .Net, passing a reference type as an In parameter has relatively low overhead, while passing a value type as an In parameter has a higher overhead because a copy of the value has to be made. Repeating the test with all parameters set to be Ref or Out parameters gave me a much better result, with the maximum sustainable throughput now rising (most likely due to efficiencies and streamlining of the called orchestrations) and the average orchestration completion time dropping to 405 ms.
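To put the three averages side by side, here is a small arithmetic sketch. It is written in Go purely for convenience (nothing in my solution is Go), the constant and function names are my own, and the only inputs are the three completion times quoted above.

```go
package main

import "fmt"

// Average orchestration completion times quoted in the post, in milliseconds.
const (
	monolithMs = 570.0 // original single orchestration
	splitInMs  = 797.0 // split orchestrations, mostly In parameters
	splitRefMs = 405.0 // split orchestrations, Ref/Out parameters
)

// percentChange returns the relative change from before to after, in percent.
func percentChange(before, after float64) float64 {
	return (after - before) / before * 100
}

func main() {
	fmt.Printf("monolith -> split (In):   %+.1f%%\n", percentChange(monolithMs, splitInMs))   // +39.8%
	fmt.Printf("monolith -> split (Ref):  %+.1f%%\n", percentChange(monolithMs, splitRefMs))  // -28.9%
	fmt.Printf("split (In) -> split (Ref): %+.1f%%\n", percentChange(splitInMs, splitRefMs)) // -49.2%
	fmt.Printf("In time / Ref time:        %.2fx\n", splitInMs/splitRefMs)                   // 1.97x
}
```

In other words, the In version took roughly 40% longer per instance than the monolith, while the Ref/Out version took about half as long as the In version.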

Further testing highlighted that switching orchestration message parameters from In to Out or Ref resulted in the biggest change in performance, while the same change on value type parameters resulted in a smaller yet still substantial change, and changes to reference type parameters appeared to have no performance implications (this appears to be in line with the .Net principle that reference types behave the same way when passed as In or Ref parameters). My colleague Mark Brimble pointed me towards this BizTalk 2004 article, which implies that all In parameters (with no exception explicitly stated for reference type parameters) are copied when calling an orchestration. That sounds quite different from typical .Net behavior and didn’t match my observed results, as I expected that reference type In parameters would not result in a copy of the object being made. I decided that I had to prove my theory.

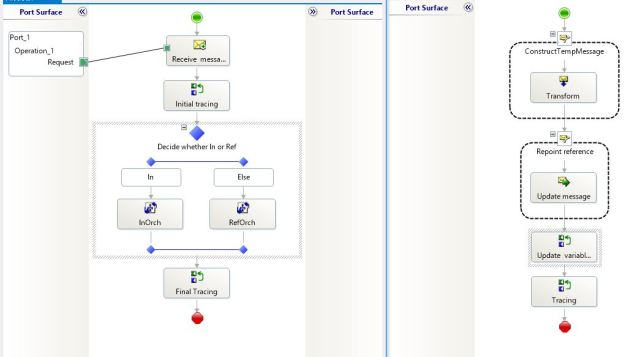

I threw together an orchestration that receives a message with a distinguished element. The orchestration also spins up a variable of a custom class I created, calling the default constructor to set a default value on a contained string property, and a variable of type int32 with a default value. These values are all traced to a file, and based on the value of the distinguished element in the received message one of two orchestrations is called via a call orchestration shape. Both of these orchestrations update the message (messages are immutable so a message can’t really be updated, however the message variable can be reassigned to point to a new message), the custom object and the int32 variable, and then trace the updated values to the file as well. The catch is that one of these called orchestrations accepts its parameters as Ref parameters and the other accepts them as In parameters. The calling orchestration then traces the values of its message and variables to the file to see whether they have been updated or not.

The results of this test are below and they matched up with my understanding of .Net behavior.

Based on the above one can surmise that a message behaves similarly to a value type in .Net (maybe not under the hood, but in behavior): if you pass it as an In parameter and its reference is updated in a called orchestration, its value won’t be updated in the calling orchestration. The same applies to value types, as expected. Reference types will be updated in the calling orchestration regardless of whether they were passed as In or Ref parameters. A Ref parameter of any type will have its value updated in the calling orchestration if it is updated in a called orchestration.
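The observed behavior can be mimicked outside BizTalk. The sketch below is an analogy in Go rather than the orchestration itself: plain arguments play the role of In parameters, pointers to the caller's variables play the role of Ref parameters, and a pointer to a struct plays the role of a .Net reference type. The `Message` and `Settings` types and the function names are hypothetical stand-ins I made up for illustration.

```go
package main

import "fmt"

// Settings stands in for the custom class (a .Net reference type).
type Settings struct{ Name string }

// Message stands in for an orchestration message: treated as immutable,
// so "updating" it means assigning a whole new value.
type Message struct{ Body string }

// callWithIn mimics In parameters: every argument is copied on entry.
// For settings only the pointer is copied, so field changes are shared.
func callWithIn(msg Message, settings *Settings, count int) {
	msg = Message{Body: "updated"} // replaces the local copy only
	settings.Name = "updated"      // mutates the object the caller also sees
	count = 2                      // changes the local copy only
}

// callWithRef mimics Ref parameters: the callee receives the address of
// each caller variable and writes through it.
func callWithRef(msg *Message, settings **Settings, count *int) {
	*msg = Message{Body: "updated"}
	*settings = &Settings{Name: "updated"}
	*count = 2
}

// run sets up fresh caller state, makes one call, and returns what the
// caller sees afterwards.
func run(useRef bool) (Message, *Settings, int) {
	msg := Message{Body: "original"}
	settings := &Settings{Name: "original"}
	count := 1
	if useRef {
		callWithRef(&msg, &settings, &count)
	} else {
		callWithIn(msg, settings, count)
	}
	return msg, settings, count
}

func main() {
	for _, useRef := range []bool{false, true} {
		msg, settings, count := run(useRef)
		fmt.Printf("ref=%v message=%s object=%s int=%d\n",
			useRef, msg.Body, settings.Name, count)
	}
	// ref=false message=original object=updated int=1
	// ref=true message=updated object=updated int=2
}
```

The first line of output corresponds to the In orchestration (only the custom object changes in the caller) and the second to the Ref orchestration (everything changes).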

Now that we have proven the behavior of In/Ref parameters (an Out parameter is effectively like a Ref parameter except that there is no guarantee that the parameter value was initialized in the caller prior to calling the called orchestration), the big question is why the slowdown with In parameters? The obvious answer for messages and value types is that an actual copy of the value is made for the called orchestration, which would definitely have an impact on the CPU. The larger and more complex the footprint of the parameter in question, the larger the performance implication.

In summary, if performance is very important to you and you intend to use the call orchestration shape then beware parameters with a direction of In. If the reason you are using call orchestration shapes is purely to break up a large orchestration and you are calling on other orchestrations within your control then it should be quite safe to have a parameter direction of Ref even if you don’t actually require it to be Ref, but obviously this needs to be evaluated on a case by case basis.

Hi!

Thanks for the analysis. As you say, it’s worth keeping the cost of parameter passing in mind, and that In messages are copied. The fact that the speed almost doubled suggests there might be more than just copying a bit of memory going on, though.

I don’t find the 2004 paper to be surprising. In parameters in .NET are always copied to the formal parameter, regardless of whether they are values or references. They simply initialise the parameter. Ref parameters are the address of the actual parameter.

I’m a bit confused by this statement though: “reference types behave the same way when passed as In or Ref parameters”. I think I get your point: references are small and cheap to copy, whether they are reference variables or a Ref to the actual argument.

Thanks again.

Steve.

PS. BTW, the C and C++ idea of “const *” was intended to solve the performance issues you’ve identified — passing an address is cheap, and the const protects the original data.

Hi Steve, when I said “reference types behave the same way when passed as In or Ref parameters” I was referring to reference types vs. value types (see this article for an example description of the difference – http://www.albahari.com/valuevsreftypes.aspx). The key here is that regardless of whether reference type objects are passed as In or Ref parameters they will exhibit the behavior of a Ref parameter, with no copies of the object being made but rather pointers (or something like a pointer) to an area in memory being passed around. I’m no .NET guru so excuse my basic understanding, but I’m pretty certain that this explains some of the observations I made.

Cheers

Johann

Really interesting post, and thanks for taking the time to document it for the wider audience.

Cheers,

SteveC.

PS. How did you manage to get the single image of the orchestration?

Hi Steve, thanks for the positive feedback. I enjoyed investigating this issue and documenting it. I used the BizTalk Documentor to get the single image of the orchestration. It is a fantastic tool and I encourage you to use it to capture the state of BizTalk applications/environments.

Cheers

Johann
